LLNL-led team uses machine learning to derive black hole motion from gravitational waves

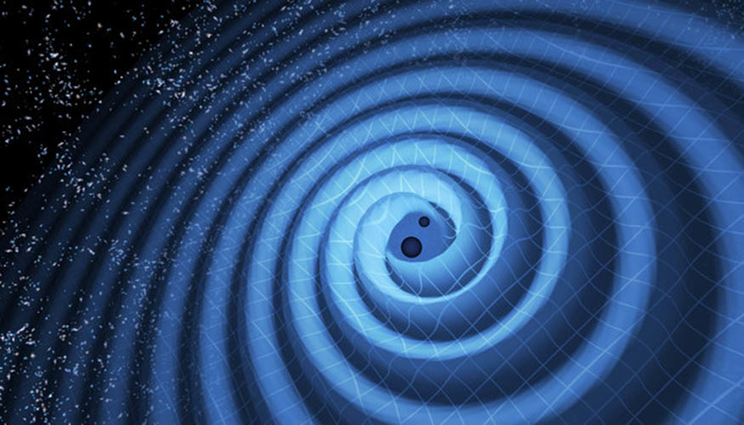

A multidisciplinary team including an LLNL mathematician has discovered a machine learning-based technique capable of automatically deriving a mathematical model for the motion of binary black holes from raw gravitational wave data. Gravitational waves are produced by cataclysmic events such as the merger of two black holes. The waves ripple outward as the black holes spiral toward each other and can be detected by installations such as the Laser Interferometer Gravitational-wave Observatory (LIGO). Image credit: LIGO/T. Pyle.

The announcement that the Laser Interferometer Gravitational-wave Observatory (LIGO) had detected gravitational waves during the merger of two black holes sent ripples throughout the scientific community in 2016. The earthshaking news not only confirmed one of Albert Einstein’s key predictions in his general theory of relativity, but also opened a door to a better understanding of the motion of black holes and other spacetime-warping phenomena.

Cataclysmic events such as the collision of black holes or neutron stars produce the largest gravitational waves. Binary black holes orbit around each other for billions of years before eventually colliding to form a single massive black hole. During the final moments as they merge, part of their mass is converted to a gigantic burst of energy, per Einstein’s equation E = mc², which can then be detected in the form of gravitational waves.

To understand the motion of binary black holes, researchers have traditionally simplified Einstein’s field equations and solved them to calculate the emitted gravitational waves. The approach is complex and requires expensive, time-consuming simulations on supercomputers or approximation techniques that can lead to errors or break down when applied to more complicated black hole systems.

Along with collaborators at the University of Massachusetts Dartmouth and the University of Mississippi, a Lawrence Livermore National Laboratory (LLNL) mathematician has discovered an inverse approach to the problem: a machine learning-based technique capable of automatically deriving a mathematical model for the motion of binary black holes from raw gravitational wave data. The technique requires only the computing power of a laptop. The work appears online in the journal Physical Review Research.

Working backward using gravitational wave data from numerical relativity simulations, the team designed an algorithm that could learn the differential equations describing the dynamics of merging black holes for a range of cases. The waveform inversion strategy can quickly output a simple equation with the same accuracy as equations that have taken humans years to develop or models that take weeks to run on supercomputers.

“We have all this data that relates to more complicated black hole systems, and we don’t have complete models to describe the full range of these systems, even after decades of work,” said lead author Brendan Keith, a postdoctoral researcher in LLNL’s Center for Applied Scientific Computing. “Machine learning will tell us what the equations are automatically. It will take in your data, and it will output an equation in a few minutes to an hour, and that equation might be as accurate as something a person had been working on for 10-20 years.”

Keith and the other two members of the multidisciplinary team met at a computational relativity workshop at the Institute for Computational and Experimental Research in Mathematics at Brown University. They wanted to test ideas from recent papers describing a similar type of machine learning problem — one that derived equations based on trajectories of a dynamical system — on lower-dimensional data, like that of gravitational waves.

Keith, a computational scientist in addition to being a mathematician, formulated the inverse problem and wrote the computer code. His academic partners helped him obtain the data, added the physics needed to scale from one-dimensional data to a multi-dimensional system of equations, and helped interpret the model.

“We had some confidence that if we went from one dimension to one dimension, it would work — that’s what the earlier papers had done — but a gravitational wave is lower-dimensional data than the trajectory of a black hole,” Keith said. “It was a big, exciting moment when we found out it does work.”

The approach doesn’t require complicated general relativity theory, only the application of Kepler’s laws of planetary motion and the math needed to solve an inverse problem. Starting with just a basic Newtonian, non-relativistic model (like the moon orbiting around the Earth) and a system of differential equations parameterized by neural networks, the team discovered the algorithm could learn from the differences between the basic model and one that behaved much differently (like two orbiting black holes) to fill in the missing relativistic physics.
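The idea of correcting a Newtonian model from waveform data alone can be illustrated with a toy sketch. This is not the team's code, and it makes strong simplifying assumptions: the neural networks are replaced by a single unknown coefficient `k`, the form of the missing term (the leading-order radiation-reaction scaling, where the orbital frequency grows as the 11/3 power of itself) is assumed known in advance, and the "observed" waveform is synthetic. All names and numerical values are hypothetical.

```python
import numpy as np

def simulate_waveform(k, omega0=0.05, t_end=200.0, dt=0.05):
    """Toy circular binary in arbitrary units.

    Newtonian baseline: d(phi)/dt = omega, with omega constant (k = 0).
    Assumed correction:  d(omega)/dt = k * omega**(11/3).
    Returns the one-dimensional waveform h(t) = cos(2 * phi).
    """
    n = int(round(t_end / dt))
    phi, omega = 0.0, omega0
    h = np.empty(n)
    for i in range(n):
        h[i] = np.cos(2.0 * phi)
        # Forward Euler step of the corrected equations of motion.
        phi += dt * omega
        omega += dt * k * omega ** (11.0 / 3.0)
    return h

def fit_correction(h_target, candidates):
    """Pick the candidate k whose waveform best matches the target.

    A brute-force scan stands in for the gradient-based training that a
    neural-network parameterization would need.
    """
    losses = np.array(
        [np.mean((simulate_waveform(k) - h_target) ** 2) for k in candidates]
    )
    return candidates[int(np.argmin(losses))], losses

K_TRUE = 2.0                                  # hypothetical "relativistic" strength
h_observed = simulate_waveform(K_TRUE)        # synthetic stand-in for waveform data
candidates = np.linspace(0.0, 4.0, 41)        # k = 0 is the pure Newtonian model
k_fit, losses = fit_correction(h_observed, candidates)

print("recovered k:", k_fit)
print("Newtonian (k = 0) waveform error:", losses[0])
```

Only the waveform enters the fit, never the orbit itself, which mirrors the lower-dimensional-data setting Keith describes. A real application would have to learn the form of the correction as well as its size.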

“This is a completely new way to approach the problem,” said co-author Scott Field, an assistant professor in mathematics and gravitational wave data scientist at the University of Massachusetts, Dartmouth. “The gravitational-wave modeling community has been moving towards a more data-driven approach, and our paper is the most extreme version of this, whereby we rely almost exclusively on data and sophisticated machine learning tools.”

Applying the methodology to a range of binary black hole systems, the team showed that the resulting differential equations automatically accounted for relativistic effects in black holes such as perihelion precession, radiation reaction and orbital plunge. In a side-by-side comparison with state-of-the-art orbital dynamics models that the scientific community has used for decades, the team discovered their machine learning model was equally accurate and could be applied to more complex black hole systems, including situations with higher dimension data but a limited number of observations.

“The most surprising part of the results was how well the model could extrapolate outside of the training set,” said co-author Akshay Khadse, a Ph.D. student in physics at the University of Mississippi. “This could be used for generating information in the regime where the gravitational wave detectors are not very sensitive or if we have a limited amount of gravitational wave signal.”

The researchers will need to perform more mathematical analysis and compare their predictions to more numerical relativity data before the method is ready to use with gravitational wave data currently being collected by the LIGO installations, the team said. They hope to devise a Bayesian inversion approach to quantify uncertainties and apply the technique to more complicated systems and orbital scenarios, as well as use it to better calibrate traditional gravitational-wave models.

The work was performed with a grant from the National Science Foundation and funding from LLNL.

Contact

Jeremy Thomas

[email protected]

(925) 422-5539

Related Links

Physical Review Research