LLNL team reaches milestone in power grid optimization on world’s first exascale supercomputer

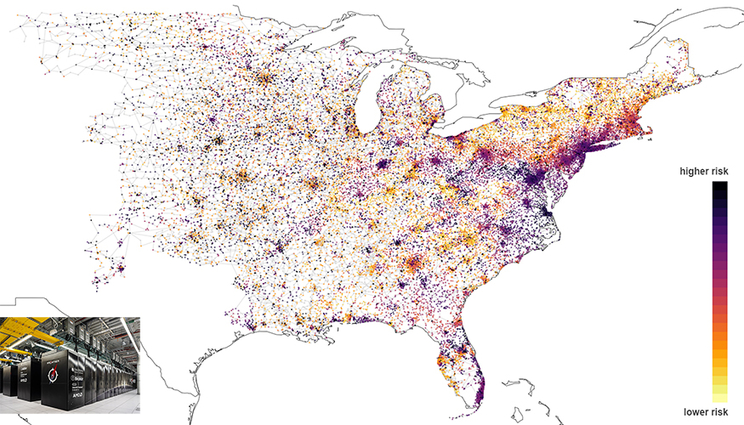

As part of the Exascale Computing Project’s ExaSGD project, Laboratory researchers ran HiOp, an open-source optimization solver, on 9,000 nodes of Oak Ridge National Laboratory’s Frontier exascale supercomputer. In the largest simulation of its kind to date, Frontier allowed researchers to determine safe and cost-optimal power grid setpoints over 100,000 possible grid failures (also called contingencies) and weather scenarios in just 20 minutes. Pictured is a heatmap of the risk of equipment overload for a 70,000-bus synthetic system, representative of the eastern United States, with under 20,000 contingencies. Graphic courtesy of Cosmin Petra.

Ensuring the nation’s electrical power grid can function with limited disruptions in the event of a natural disaster, catastrophic weather or a manmade attack is a key national security challenge. Grid management is further complicated by the growing number of renewable energy sources, such as solar and wind, that are continually added to the grid, and by the fact that solar panels and other forms of distributed power generation are hidden from grid operators.

To advance the modeling and computational techniques needed to develop more efficient grid-control strategies under emergency scenarios, a multi-institutional team has run software developed at Lawrence Livermore National Laboratory (LLNL), capable of optimizing the grid’s response to potential disruption events under different weather scenarios, on Oak Ridge National Laboratory’s (ORNL’s) Frontier supercomputer. Frontier recently reached the milestone of running at exascale speeds, more than one quintillion calculations per second.

As part of the Exascale Computing Project’s ExaSGD project, researchers at LLNL, ORNL, the National Renewable Energy Laboratory (NREL) and the Pacific Northwest National Laboratory (PNNL) ran HiOp, an open-source optimization solver, on 9,000 nodes of the Frontier machine. In the largest simulation of its kind to date, Frontier allowed researchers to determine safe and cost-optimal power grid setpoints over 100,000 possible grid failures (also called contingencies) and weather scenarios in just 20 minutes. The project focused on security-constrained optimal power flow, a formulation that reflects the real-world voltage and frequency limits within which the grid must operate to remain safe and reliable.

“Because the list of potential power grid failures is large, this problem is very computationally demanding,” said Cosmin Petra, an LLNL computational mathematician and the principal investigator for LLNL. “The goal of this project was to show that exascale computers are capable of exhaustively solving this problem in a manner that is consistent with power grid operators’ current practices.”

Today, grid operators can solve only approximations of potential failures, and decisions on how to handle emergency situations usually require a human, who may or may not be able to determine how to optimally keep the grid up and running under different renewable energy forecasts, according to Petra. For comparison, system operators using commodity computing hardware typically consider only about 50 to 100 hand-picked contingencies and 5 to 10 weather scenarios.

“It is a massive jump in terms of computational power,” Petra said. “We showed that the operations and planning of the power grid can be done under an exhaustive list of failures and weather-related scenarios. This computational problem may become even more relevant in the future, in the context of extreme climate events. We could use the software stack that ran on Frontier to minimize disruptions caused by hurricanes or wildfires, or to engineer the grid to be more resilient in the longer run under such scenarios, just to give an example.”

HiOp, also used by Lab engineers for design optimization, parallelizes optimization by combining specialized linear algebra kernels with optimization decomposition algorithms. The latest version of HiOp contains several performance improvements and new linear algebra compression techniques that, over the course of the project, sped up the open-source software by a factor of 100 on the exascale machine’s GPUs.

The team “was able to utilize these GPUs increasingly better, surpassing CPUs in many instances, and that was quite an achievement given the sparse, graph-like nature of our computations,” Petra said.

The Frontier runs were validated by colleagues at PNNL using industry-standard tools, showing that the computed pre-contingency power setpoints drastically reduce the post-contingency outages with minimal increase in the operation cost.

“The pressing question is, 'What is the cost benefit of our HPC optimization software for grid operators?'” Petra said.

The LLNL team previously solved grid-operator problems in Challenge 1 of the ARPA-E Grid Optimization Competition, where it obtained the best setpoints among all participants. Petra added that “unfortunately, we were not able to do a cost-benefit analysis for the grid operator problems due to confidentiality restrictions. I would say that a 5% improvement in operations cost justifies a high-end parallel computer, while anything less than 1% improvement will likely require downsizing the scale of computations. But we really do not know at this moment.”

Because the optimization software stack is open-source and lightweight, grid system operators could scale down the technology and incorporate it into their current practices on commodity HPC systems in a cost-effective manner.

Petra said that being among the first teams to run on Frontier, the world’s first exascale system, would not have been possible without close collaboration with teams in ECP and support from the Oak Ridge Leadership Computing Facility. The ExaSGD project also included researchers from Argonne National Laboratory.

“We’ve been working toward exascale for the last four years,” Petra said. “We were lucky to face a class of problems with very rich parallelization opportunities, but we also correctly anticipated that we needed to keep the communication pattern as simple as possible to avoid porting, scalability and deployment bottlenecks later on exascale machines.”

Jingyi “Frank” Wang, a computer scientist in the Uncertainty Quantification and Optimization Group in LLNL’s Center for Applied Scientific Computing, and Petra developed a new optimization algorithm that uses sophisticated mathematics to simplify the communication footprint on Frontier while maintaining good convergence properties, helping the team achieve the exascale feat. The LLNL team also included research engineer Ignacio Aravena Solis and computational mathematician Nai-Yuan Chiang.

Petra, who also leads a Laboratory Directed Research and Development project in contact design optimization, said he hopes the team can engage more closely with power industry stakeholders and draw in more HiOp users for optimization at LLNL, with the goal of parallelizing those computations and bringing them into the realm of HPC.

Contact

Jeremy Thomas

(925) 422-5539

Related Links

Exascale Computing Project’s ExaSGD

Frontier supercomputer

Tags

ASC

HPC, Simulation, and Data Science

Computing

Energy

Featured Articles