LLNL and SambaNova Systems announce additional AI hardware to support Lab’s cognitive simulation efforts

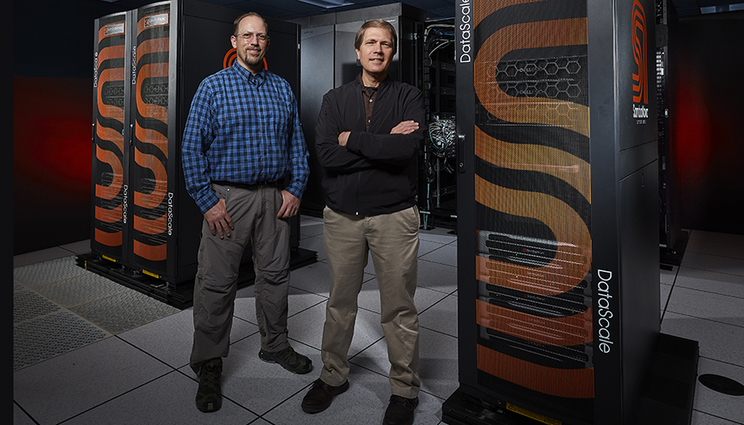

LLNL Informatics Group Leader Brian Van Essen (left) and Bronis R. de Supinski, Chief Technology Officer for Livermore Computing, stand by the new SambaNova artificial intelligence hardware in the Livermore Computing Center. Photos by Garry McLeod/LLNL.

Lawrence Livermore National Laboratory (LLNL) and SambaNova Systems have announced the addition of SambaNova's spatial data flow accelerator to LLNL's Livermore Computing Center, part of an effort to upgrade the Lab's cognitive simulation (CogSim) program.

LLNL will integrate the new hardware to further investigate CogSim approaches that combine artificial intelligence (AI) with high performance computing (HPC), including how deep neural network hardware architectures can accelerate traditional physics-based simulations, as part of the National Nuclear Security Administration's (NNSA's) Advanced Simulation and Computing (ASC) program. The Lab expects to use the SambaNova AI systems to improve model fidelity and to manage growing volumes of data, boosting overall speed, performance and productivity for stockpile stewardship applications, fusion energy research and other basic science work.

“Multi-physics simulation is complex,” said LLNL Informatics Group Leader Brian Van Essen. “Our inertial confinement fusion (ICF) experiments generate huge volumes of data. Yet, connecting the underlying physics to the experimental data is an extremely difficult scientific challenge. AI techniques hold the key to teaching existing models to better mirror experimental models and to create an improved feedback loop between the experiments and models. The SambaNova system will help us create these cognitive simulations.”

In 2020, LLNL integrated SambaNova Systems' DataScale AI accelerator into the NNSA's Corona supercomputing cluster, an 11-plus petaFLOP machine, allowing scientists to use AI to conduct stockpile stewardship and ICF research, as well as COVID-19-related research, more quickly and efficiently. The system was funded by the ASC program and is part of an agreement between the Department of Energy (DOE) and SambaNova Systems to accelerate AI within the DOE national laboratories.

LLNL researchers said the next stage of the collaboration will couple the heterogeneous system more loosely with the Lab's supercomputing clusters. That looser coupling should support a broader selection of workloads and let LLNL draw on a wider range of traditional computing resources, resulting in a more general solution with more possible use cases.

“We are looking to leverage AI to improve speed, energy use and data motion,” said Bronis R. de Supinski, chief technology officer for Livermore Computing. “SambaNova has a different architecture than CPU or GPU-based systems, which we are leveraging to create an enhanced approach for CogSim that leverages a heterogeneous system combining the SambaNova DataScale with our supercomputing clusters.”

Rodrigo Liang, CEO of SambaNova Systems, added: “Scientific discoveries rely on speed, accuracy and collaboration. We’ve built an incredible partnership to solve complex scientific issues — together. We’re excited to leverage SambaNova’s agility, power and flexibility to fulfill Lawrence Livermore National Laboratory’s mission of science in the national interest.”

Contact

Jeremy Thomas

(925) 422-5539
