Computing codes, simulations helped make ignition possible

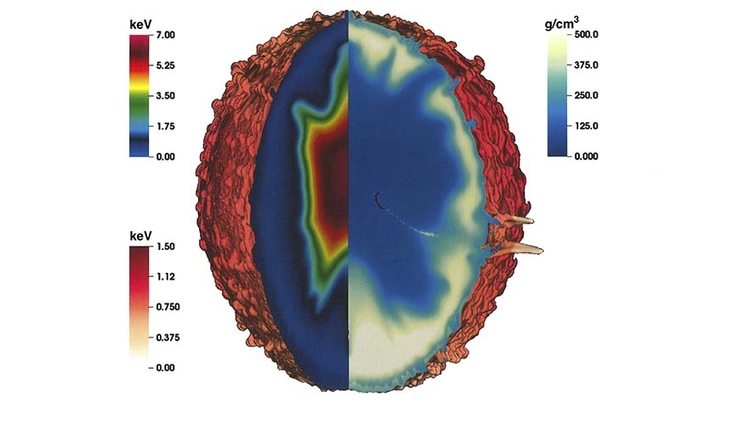

This graphic shows a high-resolution 3D HYDRA capsule simulation of a June 2017 NIF shot. The spherical contour surface shows the ablation front colored by ion temperature. The cutaway view shows density on the right where the capsule shell contains the hot spot. The jet from the fill tube is visible near the equator. Ion temperature is shown on the left. Credit: Marty Marinak.

Part 6 in a series of articles describing the elements of Lawrence Livermore National Laboratory's fusion breakthrough.

For Lawrence Livermore National Laboratory (LLNL) physicist George Zimmerman, and for the hundreds of physicists, computer scientists and code developers who have worked on fusion for decades, computer simulations have been inextricably tied to the quest for ignition.

Harkening back to the genesis of LLNL’s inertial confinement fusion (ICF) program, codes have played an essential role in simulating the complex physical processes that take place in an ICF target and the facets of each experiment that must be nearly perfect.

Many of these processes are too complicated, expensive or even impossible to predict through experiments alone. With only a few NIF laser shots per year to test target and experimental designs, computer modeling provides designers with valuable insights into which ideas are more likely to work.

Zimmerman’s one- and two-dimensional ICF code LASNEX was the first computer code to incorporate all the required physics for ICF and served as the foundation for the advanced high-resolution 3D codes that followed and are used to model all aspects of ICF today.

Those range from the specifications of the hohlraum — the cylindrical housing for the deuterium-tritium (DT) capsule that generates the thermonuclear reaction — to the behavior of the X-rays produced when the lasers hit their target, to implosion dynamics and the physics of a burning plasma.

Codes provide valuable information that can be used to analyze data and extrapolate predicted results.

“I think they’re instrumental, not only in bringing in funding, but also in guiding the design work in the right direction,” Zimmerman said. “If a simulation says a design won’t work, you can probably count on that. If a simulation is very difficult, that might be a hint to pursue a different path.”

LASNEX was first introduced in a celebrated 1972 Nature paper that presented the original concept that hydrogen fuel pelted with a high-powered laser could produce the elusive fusion burn. LASNEX was key to establishing funding for the numerous LLNL laser systems and became an essential tool on the path to ignition, helping scientists and target designers better understand increasingly complicated ICF experiments and the vital importance of implosion symmetry, stability and timing to a successful ignition.

Zimmerman won the 1997 Edward Teller Award for his work on LASNEX. He and his team — including longtime physicists David Bailey and Judy Harte and computer scientist Lee Taylor — actively maintain LASNEX today and are currently developing new models to assess remaining experimental anomalies. The code continues to be fundamental for target designers to “try things out” and provides insights into which designs could be successful.

“LASNEX has been benchmarked against laser and ICF experiments for 50 years so there’s some validity to the results,” Zimmerman said. “Because it’s been through all that, we know that it is reasonably complete. The code has lots of knobs, so we can turn a process on or off and ask, ‘Did that matter?’ We also have implemented many potentially important processes just to see if they matter. This is very useful information for other ICF code developers as they prioritize the implementation of various models.”

To many, including Lab computational physicist Michael “Marty” Marinak, NIF’s ignition achievement is all the evidence needed to prove codes were sufficiently “close” to reality to enable designers — working with the target fabrication team, experimentalists and the laser team — to guide the ICF program to success.

Marinak — the lead developer for HYDRA, the principal ICF code used today — said the exponential growth in high performance computing (HPC) at LLNL, along with improvements to HYDRA, allowed for the first high-resolution, fully spherical 3D simulations of ICF implosions.

In the run-up to ignition, LLNL physicist Dan Clark applied full-sphere simulations to ICF targets, giving scientists a much clearer understanding of what problems needed to be fixed to make ignition happen, including the relative impacts of various asymmetries and minuscule details of engineered features on the capsule, Marinak said.

In 2019 and 2020, a series of simulations performed with HYDRA told scientists that several sources of asymmetry in the implosion were acting in concert to limit performance and prevent ignition.

“When we started generating diagnostics from full-sphere 3D simulations, a lot of the mysteries went away,” Marinak said. “The simulations told us if you fixed just one of those issues, you probably wouldn't be able to measure an improvement. Even if you fixed all of them to within target fabrication’s abilities, you still wouldn't get the capsule to ignite. That was the turning point. We couldn’t just fix the sources of asymmetry. We needed a more robust target.”

HYDRA takes advantage of massively parallel computing, where large numbers of processing nodes work on pieces of a computational problem in parallel, allowing them to handle massive datasets and provide faster solutions. Imbued with decades of knowledge about targets and all the physics scientists know how to model in an ICF code, HYDRA is used to simulate the entire implosion in 3D.
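The idea of splitting one problem across many workers can be illustrated with a minimal sketch. This is not HYDRA's method: it is a toy 1D diffusion step, and the function names, the `alpha` coefficient and the use of Python threads as stand-ins for compute nodes are all assumptions made for illustration. Each chunk borrows one "halo" cell from each neighbor so that it can update its own cells independently.

```python
from concurrent.futures import ThreadPoolExecutor


def diffuse_step(u, alpha=0.1):
    """One explicit diffusion step on a 1D grid; the two end values stay fixed."""
    new = list(u)
    for i in range(1, len(u) - 1):
        new[i] = u[i] + alpha * (u[i - 1] - 2.0 * u[i] + u[i + 1])
    return new


def diffuse_step_decomposed(u, n_parts=4, alpha=0.1):
    """Same step, computed chunk by chunk (toy domain decomposition)."""
    n = len(u)
    bounds = [(k * n // n_parts, (k + 1) * n // n_parts) for k in range(n_parts)]

    def work(bound):
        lo, hi = bound
        # Halo cells: one extra value copied from each neighboring chunk.
        h_lo, h_hi = max(lo - 1, 0), min(hi + 1, n)
        local = diffuse_step(u[h_lo:h_hi], alpha)
        # Return only the cells this chunk owns, dropping the halo cells.
        return local[lo - h_lo:len(local) - (h_hi - hi)]

    # Threads stand in for processing nodes in this illustration.
    with ThreadPoolExecutor(max_workers=n_parts) as pool:
        pieces = list(pool.map(work, bounds))
    return [x for piece in pieces for x in piece]


# A single hot cell in the middle of a cold grid.
u0 = [0.0] * 16
u0[8] = 1.0
same = diffuse_step_decomposed(u0, n_parts=4) == diffuse_step(u0)
```

Because each chunk sees the same neighbor values it would see on the full grid, the decomposed step reproduces the single-domain result. Production codes exchange halo data between nodes over a network at every step, which this sketch does not attempt to model.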

The code models every aspect of the hohlraum, including up to 100 specifications for each target — the capsule’s dimensions and thickness, the smoothness of the ablator surface and the diameter of the fill tube used to inject the hydrogen fuel into the target. HYDRA is also used across the national fusion program to model various types of ICF targets, including direct-drive, heavy ion and magnetically driven targets.

Designers used HYDRA to make the target more robust, changing the hohlraum’s size and making the capsule thicker to take advantage of the boost in energy gained from bumping the NIF laser from 1.9 to 2.05 megajoules (MJ). They also reduced the laser entrance hole size to make the target more efficient — the fewer X-rays that escape the hohlraum, the more energy would impact the capsule. They called the target “Hybrid-E High-Energy (HyeHE).”

“The idea was to make a bigger target, but we had to make it bigger in a way that optimized all the desired properties — a task where simulations excel,” Marinak said. “The code was telling us, along with theory, that reducing the time between when the laser goes off and the capsule reaches peak compression velocity was the best place to use that (additional) energy. Then you’ve got to make the capsule thicker because you're burning off more of it. So how much thicker do you make it?”

HYDRA simulations provided an answer. With the new target specs and laser energy accounted for, and small adjustments to improve implosion symmetry, the ICF team made an integrated prediction — an ensemble of target simulations showing probabilities of the resultant energy yield. For the first time, the predictions told scientists that the likelihood the shot would achieve the elusive breakeven point was slightly greater than 50%.
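The notion of an ensemble prediction can be sketched in a few lines. Everything in the example below is hypothetical: `toy_yield_mj` is an invented formula with no physical validity, the input spreads are made up, and the 2.05 MJ threshold simply treats breakeven as a yield above the laser energy quoted earlier. The sketch shows only the bookkeeping: vary the uncertain inputs, run the model many times, and report the fraction of runs that clear the threshold.

```python
import random


def toy_yield_mj(laser_mj, asymmetry, shell_defect):
    """Hypothetical stand-in for a simulation. NOT a physical model."""
    base = 3.0 * (laser_mj / 2.05) ** 4
    penalty = (1.0 + 8.0 * asymmetry ** 2) * (1.0 + 5.0 * shell_defect)
    return base / penalty


def ensemble_probability(n_runs, threshold_mj, seed=0):
    """Run the toy model n_runs times and return (fraction above threshold, yields)."""
    rng = random.Random(seed)  # seeded so the ensemble is reproducible
    yields = []
    for _ in range(n_runs):
        laser = rng.gauss(2.05, 0.02)        # assumed shot-to-shot energy spread
        asym = abs(rng.gauss(0.0, 0.2))      # assumed implosion asymmetry
        defect = rng.uniform(0.0, 0.2)       # assumed capsule imperfection
        yields.append(toy_yield_mj(laser, asym, defect))
    hits = sum(1 for y in yields if y > threshold_mj)
    return hits / n_runs, yields


probability, yields = ensemble_probability(n_runs=500, threshold_mj=2.05, seed=1)
print(f"Fraction of toy runs above threshold: {probability:.2f}")
```

The printed fraction says nothing about NIF; it only shows how a probability such as "slightly greater than 50%" can emerge from a collection of runs rather than from a single calculation.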

Lead experimental designer Annie Kritcher also forecast a doubling of the 1.2 MJ yield that had been obtained from the first shot using the HyeHE design on Sept. 19, 2022. Both outcomes were as the code had predicted.

Based on the knowledge gained from HYDRA and other codes, Marinak was confident target design was “moving in the right direction” and would eventually result in higher yields.

“I always thought we would make it, but ignition is difficult and was harder to accomplish than we had thought, and that's probably why there’s no one else in the world that has done it,” Marinak said. “Now we have a much clearer understanding of the behavior of these implosions and the challenges that must be overcome. We promised that we could do this thing that no one else has done before for stockpile stewardship and for other purposes, and it was greatly rewarding to finally see that we helped to make that happen.”

Cavalcade of codes

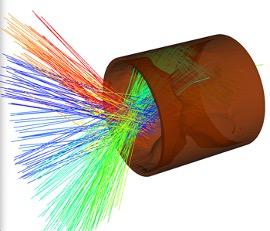

The multiphysics code KULL simulates laser beams shooting into the laser entrance hole of a gold hohlraum during an ICF experiment. Credit: Kevin Driver

With the road to ignition beset with multiple hurdles, a host of codes (and codes within codes) were brought to bear on the problem. Codes have been ingrained in the overall NIF design loop. They’re used to help design the experiment, to model the target capsule and iterate on designs, to answer questions from experimentalists and to understand what occurred post-experiment. The knowledge gained from each shot goes back into the codes to improve them.

The codes use material models and libraries to account for fundamental material properties and are essential to the success of the ICF models. In every case, the codes use high-fidelity equation of state (EOS) and opacity tables created by multi-disciplinary teams across the Lab. Further developing scientists’ understanding of material properties and improving these models is another necessary research and development activity to support ICF, requiring a strong partnership between the ICF designers and code teams.

“There are a lot of physics that go on in an ICF experiment, and a lot of it is highly nonlinear and very tightly coupled, so that makes it a complicated problem,” said Doug Miller, project manager for the ICF-related code KULL. “It has to work all together at the same time to get a pretty good approximation to reality. And it's got to run fast enough so the designers can come up with an idea, try a simulation, and get an answer in a day or a couple of days so they can iterate quickly before they try and build an experiment.”

KULL has made unique contributions to ICF and ignition through its three different methods for simulating radiation transport, as well as hydrodynamics, thermonuclear reactions, non-local thermodynamic equilibrium (non-LTE) properties and turbulence, and by modeling the lasers’ ability to deposit energy in a realistic way. Capabilities originally spearheaded in KULL are being transferred to LLNL’s next-generation effort, MARBL.

With its unique attributes, KULL can handle the turbulent fluid flows encountered in ICF experiments, making it a valuable computational tool for ICF.

In Miller’s view, the impact of codes on ignition has been “extremely large,” at times providing the only hint that there were many more puzzles to be solved before ignition could be realized.

“Codes were overpredicting performance for a long time, and the ‘why’ was an important mystery; it really let us know that there’s a lot going on here that we don't understand,” Miller said. “The ideas for a long time came fast and furious, and without the codes to weed out the ones that obviously didn’t work, we wouldn’t have gotten anywhere. It’s been very hand-in-glove with the design staff. It was a big shock to discover that all the little things needed to be modeled, and that it really did make a big difference.”

Other factors that needed to be accurately modeled were the behavior of the NIF laser and the fusion plasma. PF3D, a massively parallel specialized code developed by computational physicist Steve Langer and team, simulates the interaction between NIF’s high-intensity laser and the burning plasma that contains the fusion reactions. The interaction can scatter laser light in directions that experimentalists don't want. PF3D can model an entire laser beam as it hits the plasma, as well as cross-beam energy transfer, which is used to control the beams’ energy balance (see “Designing for Ignition: Precise Changes Yield Historic Results”).

CRETIN, developed as an astrophysics code to calculate the spectra coming from accretion disks around black holes, also proved extremely beneficial, predicting what the plasma inside the NIF target would do and what it would look like. The code has allowed researchers to improve the physics in the simulation codes and better match experimental results.

Capable of running in one, two and three dimensions, CRETIN is one of only a few codes in the world that can perform atomic physics and radiation transport under non-LTE conditions, such as the low-density, high-temperature and radiation environment found in the NIF target chamber. CRETIN evaluates atomic structure and transitions between atomic states during ICF experiments using atomic data.

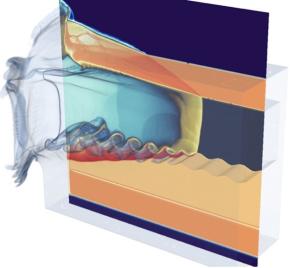

Researchers in 2018 used the code ARES and the Ascent library to perform a 98-billion-zone capsule instability calculation on LLNL’s Sierra, one of the world’s most powerful supercomputers. This image shows turbulent fluid mixing in a spherical geometry — part of a simulation of an idealized ICF implosion. Credit: Brandon Morgan

“To do that in detail accurately is an immensely large problem, which is difficult to do a single time,” said Lab physicist Howard Scott, lead developer of CRETIN. “It's not something that we can afford to do in the middle of another calculation. ICF needs good, but not highly detailed, atomic models which can be used a very large number of times during the ICF simulation, and that’s what CRETIN is providing and using during the simulations.”

In recent years, the improved atomic physics from CRETIN informed researchers that “we really needed to step up our game” and adapt, Scott said. Besides predicting radiation coming off the hohlraum walls, CRETIN has provided scientists with more detailed atomic data to model spectroscopic diagnostics. The atomic modeling code in CRETIN sits inside several radiation-hydrodynamic codes, including HYDRA and KULL and another core ICF code called ARES.

LLNL physicist and computer scientist Brian Pudliner, who served as a member of the ICF “red team,” leads the ARES project. The code was first used for ICF in 2005, and its unique ability to capture turbulent fluid mixing and its impact on physics has made it an invaluable resource for designing and analyzing high energy density (HED) and ICF experiments at LLNL. It is also applied to magnetically driven fusion in experiments at Sandia National Laboratories’ Z machine and to model debris within the NIF target chamber.

Pudliner said ARES has been working to extend its capabilities to model how the laser deposits the energy inside the hohlraum, where matter is driven to extreme states, investigating a complex interplay of multiphysics to capture how the energy is transported within the hohlraum.

“You have to be able to do that coupling between the plasma and the radiation field to model these experiments, and it's very challenging for the simulation,” Pudliner said. “ICF experiments start off at cryogenic temperatures and then very quickly, you have lasers turning things into plasmas and metal of the hohlraum heating up to high temperatures and emitting X-rays, and to simulate that you really have to capture extremes of the conditions.”

While ARES didn’t directly model the capsule used in the Dec. 5 fusion shot, it did impact the processes behind the experiments and will continue to be used heavily as scientists test different capsule designs to increase energy yield. When he heard about the historic ignition shot, Pudliner said he was excited that ICF scientists had finally overcome daunting challenges.

“When you have spent time listening to all the struggles and the scientific uncertainty of what's going on [in ICF], when you get to the other side of that, it feels like, ‘We solved it. We got around those issues,’” Pudliner said. “That is just a very satisfying turn of events. There are some scientific problems that just persist forever, and you just feel like you never make any progress, and this was one where you could win. I thought, ‘Wow, that’s progress. That’s the kind of thing that you call a breakthrough.’”

Future of inverse design

Pudliner led an effort to port ARES over to graphics processing units (GPUs) on LLNL’s current flagship Sierra supercomputer and is enthusiastic about the potential of the upcoming exascale-class (10^18 floating-point operations per second) El Capitan for future HED and ICF experiments. Scheduled for production in 2024, El Capitan’s ability to run more computationally expensive multiphysics than ever before, like those needed to model hohlraums or design experiments, will provide even more insight for ICF researchers, he said.

“There are areas that we haven’t really been able to make work on the GPU because there just hasn't been enough memory; El Capitan is going to change that,” Pudliner said. “There have been classes of problems that have worked very well on Sierra — we’ve seen enormous performance gains, and that’s changed how people work on those types of problems. With El Capitan, we’re going to see that happening in more application spaces related to NIF and high energy density physics.”

A MARBL 3D ALE radiation-hydrodynamics simulation of a laser-driven high energy density physics experiment. The model consists of 600 million quadrature points and ran on LLNL’s El Capitan Early Access System-3 computer RZVernal. Credit: Rob Rieben and Thomas Stitt

Another Lab scientist thrilled to work on El Capitan is Rob Rieben, a computational physicist who helped develop the ARES and ALE3D codes before beginning his own code, a next-generation multiphysics (magneto-radiation hydrodynamics) pulsed-power code known as MARBL.

For ICF applications, MARBL adds multi-material hydrodynamics, equations for fusion reactions, and tracking temperatures for radiation, ions and electrons. What really sets the code apart from its predecessors is its higher-order algorithms, in which mesh elements have multiple degrees of freedom to “curve,” whereas low-order algorithms run with straight-edged elements.

Solving conservation laws on a mesh that moves with the fluid has potential advantages for modeling ICF because when a capsule implodes, it is squeezed by about a factor of 30 in radius, according to Rieben. Higher-order moving-mesh algorithms can model the resulting extreme fluctuations, turbulent flow and fluid instabilities with higher resolution and accuracy, Rieben said. In general, the more mesh elements in a simulation, the better the simulation can reflect reality. High-order mesh elements also can converge faster, resulting in quicker solutions than lower-order methods, he said.
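A rough worked illustration shows why a factor-of-30 squeeze is so demanding for a mesh. It assumes an idealized uniform sphere of fixed mass, which is a simplification not stated in the article (a real capsule loses mass as the ablator burns off). The symbols $R_0$, $R_f$, $V_0$, $V_f$, $\rho_0$ and $\rho_f$ for initial and final radius, volume and density are introduced here only for this example.

```latex
\frac{V_0}{V_f} = \left(\frac{R_0}{R_f}\right)^{3} = 30^{3} = 27{,}000,
\qquad
\frac{\rho_f}{\rho_0} = \frac{V_0}{V_f} = 27{,}000 \quad \text{(fixed mass)}
```

Under that assumption, a mesh element that moves with the fluid would shrink to roughly 1/27,000 of its starting volume over the course of the implosion.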

“Think of your mesh as a bunch of Legos,” Rieben said. “A traditional Lego just has straight edges; it doesn't bend. But our elements have curvature built into them. They have more resolving power; they track more features per element than a lower-order code.”
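The difference between straight-edged and curved elements can be made concrete with a small geometric sketch. It is a generic illustration using standard Lagrange shape functions, not MARBL's implementation, and the function names are invented for the example. A quarter of a unit circle is represented once by a single straight (two-node) element and once by a single quadratic (three-node) element, and the worst-case distance from the true arc is measured.

```python
import math


def linear_point(nodes, t):
    """Point on a straight two-node element at reference coordinate t in [0, 1]."""
    (x0, y0), (x1, y1) = nodes
    return ((1.0 - t) * x0 + t * x1, (1.0 - t) * y0 + t * y1)


def quadratic_point(nodes, t):
    """Point on a curved three-node element (nodes at t = 0, 0.5 and 1)."""
    n0 = 2.0 * (t - 0.5) * (t - 1.0)
    n1 = 4.0 * t * (1.0 - t)
    n2 = 2.0 * t * (t - 0.5)
    (x0, y0), (x1, y1), (x2, y2) = nodes
    return (n0 * x0 + n1 * x1 + n2 * x2, n0 * y0 + n1 * y1 + n2 * y2)


def max_radial_error(point_fn, nodes, samples=101):
    """Largest deviation of the element from the unit circle it approximates."""
    worst = 0.0
    for i in range(samples):
        t = i / (samples - 1)
        x, y = point_fn(nodes, t)
        worst = max(worst, abs(math.hypot(x, y) - 1.0))
    return worst


def on_circle(angle):
    return (math.cos(angle), math.sin(angle))


# Both elements span the same quarter circle; the nodes lie exactly on it.
straight = [on_circle(0.0), on_circle(math.pi / 2)]
curved = [on_circle(0.0), on_circle(math.pi / 4), on_circle(math.pi / 2)]

linear_error = max_radial_error(linear_point, straight)
quadratic_error = max_radial_error(quadratic_point, curved)
print(f"straight element error: {linear_error:.4f}")
print(f"curved element error:   {quadratic_error:.4f}")
```

The single curved element tracks the arc far more closely than the single straight one, which is the sense in which a higher-order element can capture more features per element.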

Rieben and his multidisciplinary team of physicists, computer scientists, engineers and mathematicians have been working to port MARBL and its million-plus lines of code to run on GPUs, first with Sierra, and now for the upcoming exascale El Capitan.

“What we consider heroic today will be commonplace on El Capitan,” Rieben said. “That’s one of our goals — that thing that maybe only one person could do over the course of a long period of time, now a lot more people can do in a very short time.”

Other implications for ICF will be the ability to run high-fidelity 3D ensembles (collections of simulations) to answer multiple scientific questions at once and perform unprecedented uncertainty quantification and machine learning (ML) studies, Rieben said. The capability opens the door to ML-backed design optimization, giving researchers an expanded design space exploration tool to create more robust targets.

For the first time, scientists also could create 3D ML surrogate models, trained on thousands of ICF simulations, to perform “inverse design” of target capsules, where AI techniques are used to back-engineer optimal target initial conditions and drives based on the desired yield output.
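The inverse-design loop described above can be sketched at toy scale. All of the following is assumed for illustration: `toy_simulation` is an invented one-variable formula rather than physics, the "surrogate" is a simple piecewise-linear interpolant instead of a trained ML model, and the search is a brute-force scan. The structure is the point: run the expensive model a limited number of times, fit a cheap stand-in, then search the stand-in for the input that gives the desired output.

```python
from bisect import bisect_right


def toy_simulation(thickness_um):
    """Hypothetical forward model (NOT physics): output peaks at 80 um."""
    return 3.0 - 0.002 * (thickness_um - 80.0) ** 2


def build_surrogate(xs, ys):
    """Return a cheap piecewise-linear interpolant through sorted samples."""
    def predict(x):
        # Index of the right-hand sample of the segment containing x.
        j = min(max(bisect_right(xs, x), 1), len(xs) - 1)
        x0, x1 = xs[j - 1], xs[j]
        w = (x - x0) / (x1 - x0)
        return (1.0 - w) * ys[j - 1] + w * ys[j]
    return predict


def inverse_design(predict, target, lo, hi, steps=2001):
    """Scan candidate inputs; return the one whose prediction is closest to target."""
    candidates = (lo + (hi - lo) * i / (steps - 1) for i in range(steps))
    return min(candidates, key=lambda x: abs(predict(x) - target))


# Step 1: a small batch of "expensive" runs (11 here; thousands in practice).
train_x = [60.0 + 2.0 * i for i in range(11)]
train_y = [toy_simulation(x) for x in train_x]

# Step 2: fit the surrogate. Step 3: search it for the desired output.
surrogate = build_surrogate(train_x, train_y)
design = inverse_design(surrogate, target=2.5, lo=60.0, hi=80.0)

# Step 4: check the proposed design against the original model.
achieved = toy_simulation(design)
print(f"proposed input: {design:.2f}, model output: {achieved:.3f}")
```

The search range is restricted to the side of the peak where the toy model is monotonic, so the desired output maps to a single input. Real design spaces have many variables and many candidate solutions, which is where ML surrogates and large 3D ensembles would come in.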

“I'm really excited about the idea of inverse design for multiphysics simulation,” Rieben said. “MARBL is extremely well-suited for this work, and we've already got some examples of doing this at small scale, and we’re looking forward to scaling that up.”

- See Part 1: Star power: Blazing the path to fusion ignition

- See Part 2: Designing for ignition: Precise changes yield historic results

- See Part 3: Ignition experiment advances stockpile stewardship mission

- See Part 4: Laser focused: Power and finesse drove NIF’s fusion ignition success

- See Part 5: NIF’s optics meet the demands of increased laser energy

Next Up: “Diagnostics were crucial to NIF’s historic ignition shot”

Contact

Jeremy Thomas

(925) 422-5539
