LAB REPORT

Science and Technology Making Headlines

April 5, 2024

Corn scraps are one form of biomass that can be made into alternative fuels. Photo courtesy of USDA.

Biomass is where it’s at

Scraps of biomass are rich in carbon that plants pulled from atmospheric carbon dioxide during photosynthesis, presenting an opportunity for those looking to take on climate change. Companies and scientists have dozens of ideas for how nature’s leftovers might help the planet. They can be made into alternative fuels for airplanes and cargo ships to displace petroleum. They can be turned into chemical products, transformed into hydrogen or used to nourish farmland.

Today, the United States uses roughly 340 million dry tons of biomass per year, very little of which is from farm and forestry waste. Nearly all of the nation’s supply comes from corn crops, wood chips and, to a lesser extent, landfill gas.

Lawrence Livermore’s “Roads to Removal” study analyzed how the U.S. could remove and store 1 billion metric tons of CO2 every year. Getting to “gigaton-scale” carbon removal is considered key to meeting the nation’s goal of net-zero emissions by 2050.

The country could potentially achieve 700 million metric tons of CO2 removal per year by 2050 using only biomass wastes and residues from forest-thinning practices, according to the report.

“With the companies that are looking to just literally bury [biomass], it’s kind of a wasted opportunity,” said Jennifer Pett-Ridge, a senior staff scientist at Lawrence Livermore and lead author of the Roads to Removal report. “It’s good in the sense that you’re removing CO2. But there are so many other materials that can be made out of biomass that benefits society and financially creates an industry.”

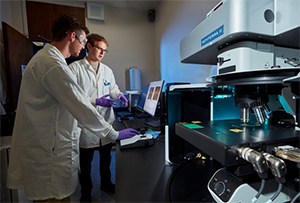

In an LLNL laboratory, Zachary Murphy (front) and his mentor Gauthier Deblonde (back) discuss the results of the chemical analyses conducted on the underground rock samples using Raman spectroscopy (the equipment pictured on the black table).

Digging deep

The groundbreaking discoveries and scientific advancements at Lawrence Livermore National Laboratory (LLNL) and across the broader national laboratory system rely on tenured staff scientists passing their knowledge on to new interns and early-career scientists.

In summer 2023, Zachary Murphy, a Ph.D. student in chemistry at the University of Central Florida, interned with LLNL’s Glenn T. Seaborg Institute under the mentorship of Gauthier Deblonde, a radiochemist in the Nuclear and Chemical Sciences Division.

“Despite there being a persistent and global demand for radiochemists, there has been a decline in those entering the field,” Deblonde said. “Providing young and motivated students like Zach the opportunity to conduct research alongside Livermore staff scientists is key to bridging knowledge gaps in radiochemistry.”

Murphy’s summer research focused on evaluating an organic-rich rock formation: characterizing which minerals are present and how radioactive elements interact with different rock layers. The work is part of a larger, ongoing project to evaluate new locations for a potential repository that would store legacy nuclear waste deep underground.

According to LLNL, the ideal repository site would have natural barriers to stop nuclear waste from spreading into the environment, because engineered barriers (such as metallic canisters) slowly break down over time through corrosion and other natural processes.

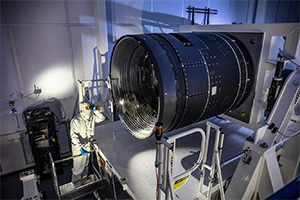

The lenses for the Legacy Survey of Space and Time camera were built at Lawrence Livermore National Laboratory. Photo courtesy of Jacqueline Ramseyer Orrell/SLAC National Accelerator Laboratory.

Eye in the sky

Scientists and engineers have announced the completion of the camera for the Legacy Survey of Space and Time (LSST), the largest digital camera ever built for astronomy. Nearly two decades in the making, the 3,200-megapixel instrument will form the heart of the 8.4 m Simonyi Survey Telescope at the Vera C. Rubin Observatory on Cerro Pachón in the Chilean Andes.

First proposed some three decades ago to help study the nature of dark matter, the LSST camera was built at the SLAC National Accelerator Laboratory. It measures 3 m by 1.65 m, roughly the size of a small car, and has a mass of 3,000 kg.

The camera includes three lenses, which were built at Lawrence Livermore National Laboratory. The biggest, 1.57 m in diameter, is the largest high-performance optical lens ever made. The camera has now completed a program of rigorous testing and will be shipped to Chile, where it will be installed on the Simonyi Survey Telescope later this year.

The camera’s 3,200-megapixel resolution, some 200 times that of a high-end consumer camera, means it would take hundreds of ultrahigh-definition TVs to display just one of its images at full size.
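As a rough check on the “hundreds of TVs” figure, the short Python sketch below divides the camera’s pixel count by the pixel count of a single screen. It assumes a 4K UHD television at 3840 × 2160 pixels (the text does not say which ultrahigh-definition format it means), and the helper name `tvs_needed` is purely illustrative.

```python
import math

# Assumption: "ultrahigh-definition TV" means a 4K UHD panel at 3840 x 2160 pixels.
UHD_WIDTH, UHD_HEIGHT = 3840, 2160
LSST_CAMERA_MEGAPIXELS = 3_200  # resolution quoted in the text


def tvs_needed(image_megapixels: float, tv_width: int, tv_height: int) -> int:
    """Minimum number of screens whose combined pixels cover the image at 1:1 scale.

    This is a lower bound: it ignores pixels wasted where a rectangular
    mosaic of screens overhangs the edges of the image.
    """
    image_pixels = image_megapixels * 1_000_000
    return math.ceil(image_pixels / (tv_width * tv_height))


print(tvs_needed(LSST_CAMERA_MEGAPIXELS, UHD_WIDTH, UHD_HEIGHT))
```

Under that assumption the answer comes out at about 386 screens, consistent with “hundreds.” A higher-resolution panel would lower the count, so the result depends on which UHD format one has in mind.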

LLNL researchers are looking into how winemaking could help sequester carbon. Photo courtesy of USDA National Institute of Food and Agriculture.

Carbon capture wines down

Wineries may soon play a significant part in carbon sequestration, thanks to the work of local scientists.

A team at Lawrence Livermore National Laboratory (LLNL) recently completed a proof-of-concept demonstration of the idea at the Continuum Estate winery in St. Helena. The project captured the gases given off by fermenting wine grapes and then mineralized the carbon dioxide, keeping the carbon out of the atmosphere.

Wine-grape fermentation in California alone produces some 450,000 tons of carbon dioxide per year, according to LLNL, equivalent to the annual emissions of almost 100,000 cars.
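The car comparison implies a per-vehicle emissions figure that can be checked with a single division. The Python sketch below uses only the two numbers attributed to LLNL; the function name is hypothetical, and the text does not say whether “tons” means short or metric tons.

```python
# Figures quoted in the text (LLNL estimate for California wine-grape fermentation).
FERMENTATION_CO2_TONS_PER_YEAR = 450_000
EQUIVALENT_CARS = 100_000  # the text says "almost 100,000", so the true count is slightly lower


def implied_tons_per_car(total_tons: float, cars: int) -> float:
    """Annual CO2 per car implied by the stated equivalence."""
    return total_tons / cars


print(implied_tons_per_car(FERMENTATION_CO2_TONS_PER_YEAR, EQUIVALENT_CARS))
```

The result is 4.5 tons of CO2 per car per year. Because the comparison is to “almost” 100,000 cars, the per-car figure the text implies is slightly above that.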

To achieve our mid-century climate goals and avert the more disastrous effects of climate change, the world must remove carbon from the air in addition to reducing emissions, according to an LLNL carbon-removal report released last year. Toward that end, the U.S. must remove carbon on the scale of a billion metric tons per year by 2050.

One promising avenue for carbon removal involves rethinking how industries handle biomass — the organic material created through agriculture.

“Plants have done the work of taking the sun’s energy and putting that into chemical energy, into the sugar,” said LLNL staff scientist Nathan Ellebracht. “Fruit is really packed with sugar. That is where the CO2 from the atmosphere is going; it’s going into the sugar that is in the fruit.”

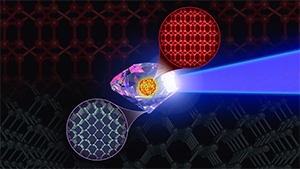

Supercomputer simulations predicting synthesis pathways for the elusive BC8 “super-diamond,” involving shock compression of a diamond precursor, are inspiring ongoing Discovery Science experiments at the National Ignition Facility (NIF). Image by Mark Meamber/LLNL.

Putting the squeeze on diamond

Simulations of an elusive form of carbon that could leave diamond in the dust for hardness may pave the way to creating it in a lab.

Known as the eight-atom body-centered cubic (BC8) phase, the configuration is expected to be up to 30% more resistant to compression than diamond – the hardest known stable material on Earth.

Physicists from Lawrence Livermore ran quantum-accurate molecular-dynamics simulations on a supercomputer to see how diamond behaves under high pressure when temperatures rise to levels that ought to make it unstable. The results revealed new clues about the conditions that could push diamond’s carbon atoms into the unusual structure.

The BC8 phase has previously been observed on Earth in two materials, silicon and germanium. Extrapolating from the properties of BC8 in those materials has allowed scientists to predict how the phase would manifest in carbon.

Carbon’s BC8 phase has never been found on Earth, though it is thought to lurk in the high-pressure environments deep inside exoplanets. Theory suggests it is the hardest form of carbon that can remain stable at pressures beyond 10 million times Earth’s atmospheric pressure. If it could be synthesized and stabilized closer to home, it would open up new avenues for research and new materials applications.