Predicting wind-driven spatial patterns of atmospheric pollutants

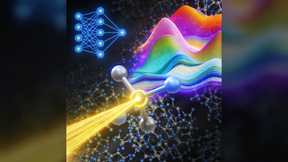

Schematic progression of the proposed data preprocessing for machine learning training. This work represents the first step towards leveraging real-world images for plume prediction.

For centuries, scientists have observed nature to understand the laws that govern the physical world. Turning observations into physical understanding has traditionally been a slow process, but powerful new algorithms can enable computers to learn physics by observing images and videos.

LLNL researchers are working to leverage this concept to predict spatial patterns influenced by wind-driven dynamics. These include phenomena commonly found in the geosciences, such as algal blooms on the ocean surface, aeolian sand dunes, volcanic ash, wildfire smoke, and air pollution plumes. Although satellites capture images of these events daily, those images have not yet been thoroughly exploited to build predictive machine learning models.

To establish a proof of concept, the team conducted a study in which they trained machine learning models on images (i.e., pixel information) instead of physical quantities to simulate the physics behind wind-driven spatial patterns. The researchers used deposition data from an existing set of thousands of computational fluid dynamics simulations. With these data, they implemented deep learning algorithms (in this case, convolutional neural network-based autoencoders) to predict the spatial patterns associated with plumes (the dispersion of liquid, gas, or dust from a source) blowing in different directions and originating from different locations. In this approach, the model used an encoder to receive an image and compress it to a representation 0.02% of the original size, and a decoder to recover the original image from that reduced space.
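To give a sense of how aggressive a 0.02% compression is, the short Python sketch below works through the arithmetic for a hypothetical case. The 256 × 256 RGB image size, the five stride-2 convolution layers, and the helper names (`conv_out`, `latent_dim_for_fraction`) are illustrative assumptions and are not taken from the study; only the 0.02% figure comes from the description above.

```python
from math import prod


def conv_out(size: int, kernel: int, stride: int, padding: int) -> int:
    """Spatial output size of a convolution (standard size formula)."""
    return (size + 2 * padding - kernel) // stride + 1


def latent_dim_for_fraction(input_shape: tuple, fraction: float) -> int:
    """Number of latent values that is roughly `fraction` of the input size."""
    return max(1, round(prod(input_shape) * fraction))


# ASSUMPTION: a 3-channel 256 x 256 image; the study's image size is not given here.
input_shape = (3, 256, 256)   # (channels, height, width)
target_fraction = 0.0002      # 0.02%, the figure quoted in the article

n_inputs = prod(input_shape)
latent_dim = latent_dim_for_fraction(input_shape, target_fraction)
actual_fraction = latent_dim / n_inputs

# ASSUMPTION: a hypothetical encoder of five stride-2 convolutions
# (kernel 3, padding 1), each halving the spatial resolution.
sizes = [input_shape[1]]
for _ in range(5):
    sizes.append(conv_out(sizes[-1], kernel=3, stride=2, padding=1))

print(f"input values:       {n_inputs}")
print(f"latent values:      {latent_dim}")
print(f"fraction retained:  {actual_fraction:.4%}")
print(f"encoder side sizes: {sizes}")
```

Under these assumptions, an image with nearly 200,000 pixel values would be squeezed into a few dozen numbers before the decoder attempts to rebuild it, which is why the latent space can be thought of as a compact summary of quantities such as wind direction and source location.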

For the present study, the team focused on predicting only static spatial patterns (i.e., patterns that do not vary with time). This is a simpler problem than the one they hope to tackle in the future: developing image-based, data-driven models to predict the spatiotemporal evolution of real-world plumes that are directly observable with cameras.

[M.G. Fernández-Godino, D.D. Lucas, and Q. Kong, Predicting wind-driven spatial deposition through simulated color images using deep autoencoders, Scientific Reports (2023), doi: 10.1038/s41598-023-28590-4.]
