Lab helped unleash power of SC

From the unwieldy Univac-1 kiloFLOP system with its vacuum tubes, installed in April 1953, to today’s BlueGene/L 596-teraFLOP machine, the Laboratory has been heavily invested in high-performance computing throughout its 56-year history. Given this early commitment to the development of high-end computing systems for advancing science and national security, it was no accident that Laboratory scientists played a key role in founding what is today the world’s premier high-performance computing (HPC) conference, originally called Supercomputing and now referred to as SC.

This week, the global HPC community gathered in Austin, Texas, to celebrate the 20th anniversary of SC under the theme "Unleashing the Power of HPC." Since the first conference in 1988, held in Orlando, Fla., high-performance computing has undergone a technological revolution, with more than a million-fold increase in the power of computing systems, and in the process has changed the scientific method as defined by Isaac Newton more than 300 years ago. The advent of teraFLOP/s (trillions of floating-point operations per second) systems has made simulation a peer to theory and experiment in scientific discovery.

"The supercomputing conference was in many respects a product of increasingly rapid change in high-performance computing. But it also has become a catalyst for that change," said Dona Crawford, associate director for Computation at LLNL, who has been an organizer and participant since SC88.

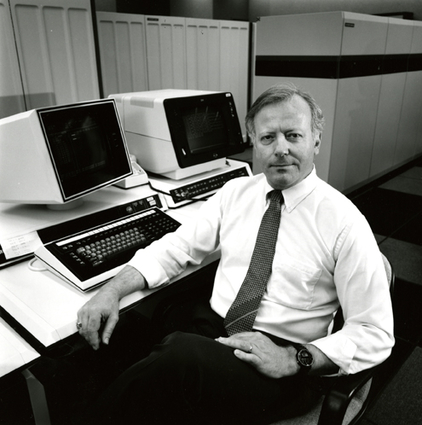

John Raneletti, who was the head of what was then the Computation Department, recalls that HPC was at a crossroads in the late 1980s and that the Lab’s computing science leaders, notably George Michael, Bob Borchers and Steve Lundstrom, recognized the need for a new direction, one catalyzed by a meeting of the best minds in industry, academia and the national labs.

"We saw a growing need for leaders in the field to get together and exchange ideas," said Raneletti, who served in a number of leadership roles for the conference from 1988 to 2000. "George (Michael) knew a lot of people from industry, academia and the labs and he was well-known and respected in the field; he had a talent for bringing people together that made him uniquely qualified to organize the first conference."

Michael, who died earlier this year, was the subject of a video tribute at this week’s conference. To read the Newsline obituary, go to the Web.

In 1988, the fastest computers at the Lab were the Cray Y-MPs at just more than 1 gigaFLOP/s (billions of floating-point operations per second), about what an average desktop system has today. But the limitations of the Cray vector and classic mainframe designs had computer scientists looking for new solutions. Earlier in the decade, scientists began exploring the idea of new high-performance architectures that would evolve into massively parallel processing, a "radical" idea at the time, even in the HPC community.

Eugene Brooks was hired at the Lab in 1983 out of Caltech to explore parallel processing as a new direction and quickly became convinced the future of high-performance computing lay in many processors working in parallel, not the traditional mainframe technology that relied on small numbers of large and expensive central processing units (CPUs).

In a 2003 interview, Brooks said early proponents of parallel processing were viewed as "heretics" and in the early 1980s were often "laughed out of talks." But the increasing availability of cheaper, more reliable microprocessors fueled the evolution of parallel processing. Brooks delivered a presentation at Supercomputing ’89 titled "Attack of the Killer Micros," describing the technological shift toward massively parallel computing.

According to Brooks, some of the first machines of this design at the Lab, brought online in 1991, were the Meiko and the Bolt, Beranek and Newman (BBN) Butterfly, so called because its communication network topology resembled a butterfly.

Mark Seager, who today leads the Lab’s effort to develop new HPC systems, recalls that the early parallel systems were dedicated to code development and otherwise pushing parallel capabilities. This turned out to be important in laying the foundation for the DOE Defense Programs’ Accelerated Strategic Computing Initiative (ASCI), launched in 1995 (before the creation of the National Nuclear Security Administration, when the effort evolved into today’s Advanced Simulation and Computing program).

It was the time-urgent ASCI effort to develop the computing capability needed for simulating nuclear weapons performance that pushed the pedal to the metal for high-performance computing and, as a result, energized the supercomputing conference, Seager said. "High-performance computing was in a slump and American competitiveness in the field was in question when ASCI started. ASCI revitalized computing at the national labs and accelerated everyone in the HPC community."

In 1993, an organization called the Top500 began ranking supercomputing systems using the LINPACK benchmark and issuing a list twice a year, one in June and one at the November supercomputing conference. The release of the list has added an element of competitive drama to the annual gathering and, in recent years, underscored how international the HPC community has become. This year 53 countries were represented at the conference. (More than 32 companies are represented on the Top500 list.)

At the core of the conference’s long-term success has been the strong leadership provided by the conference chairs in maintaining a dynamic and topical technical program, introducing new elements, expanding participation and keeping the showroom exhibit by vendors in balance with the technical exchanges, according to Dave Cooper, former associate director for Computation. Elements added in recent years include expanded educational outreach and programs, a job fair and additional competitive challenges such as the Cluster Challenge, in which teams of students work around the clock for 48 hours to build and run a high-performance computer.

Cooper, who has played a leadership role in the conference’s development since the beginning, said organizers have paid attention to what participants want and expect from the conference. "Many people have told me that this is the only conference they go to. All of the vendors they work with are there. They get to talk to the participants from the national labs, academia and industry," Cooper said. "They don’t have to justify their attendance. It’s all here."

In a video retrospective shown during the SC08 opening session, veterans of the conference marveled at its growth and described SC as being, all at once, a county fair of computing, a reunion of the ever-growing HPC family and a crystal ball providing a view of how nascent technologies will further transform the field.

Undeterred by the current economic downturn, attendees turned out in record numbers for SC08 in Austin, with more than 11,000 taking part. Preparations for SC09 have already begun, as shown by the call for participation for next year’s conference and the exhibitors’ space selection. "The future of the conference is clearly very bright," Cooper said.