Going deep: Lab employees get an introduction to the world of machine learning and neural networks


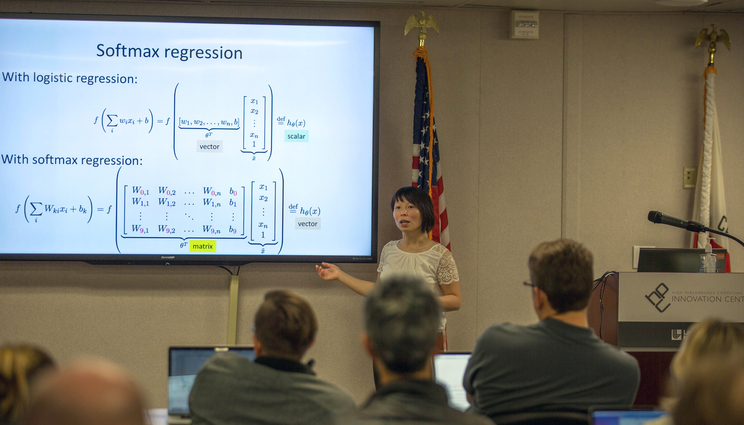

Machine learning scientist Brenda Ng taught a “Deep Learning 101” course, which introduced the basics of neural networks and deep learning to anyone with a basic knowledge of programming in Python. Photos by Julie Russell/LLNL


Deep learning is one of the most popular and widely used machine learning methods, thanks to its success in emerging technologies such as autonomous vehicles, speech recognition and image classification. But what exactly is deep learning, and how can it best be applied to Lab projects?

Lawrence Livermore National Laboratory (LLNL) employees found the answers during a recent "Deep Learning 101" course, which introduced the basics of neural networks and machine learning to anyone with a basic knowledge of programming in Python. The five-part series, which concluded Jan. 31, included lectures, demonstrations and labs in which attendees did hands-on work coding and training their own neural networks. The teaching staff included CED machine learning scientist Brenda Ng, GS-CAD data scientist Luke Jaffe and CED computer vision scientist Nathan Mundhenk, with support from teaching assistant and CED machine learning engineer Sam Nguyen.

"There are many efforts around the Lab that are doing deep learning, but we want to make it more accessible to everybody," Ng said. "Not everybody has a machine learning background, and there’s just so much information out there, in terms of tutorials and blogs, and you’re not sure which ones to even look at. The goal is to give more practical knowledge to our scientists here, so they’re not just reading about it, but they will feel empowered to try some of these models and tools in their work."

The learning series consisted of five 75-minute classes. Each class started with a lecture by Ng on the deep learning topic of the day. The lecture was followed by a programming lab, taught by Jaffe, that walked through the actual code, written with the Python machine learning library PyTorch, needed to implement the model covered in the lecture.

"We wanted to show how easy deep learning can be if you use the right tools," Jaffe said. "We wanted to remove the stigma that deep learning is a complicated black box that can only be done with supercomputers. The labs are designed to show that employees can get tangible improvements to their work, running on a laptop, starting right now."

Session topics included convolutional neural networks, generative adversarial networks, autoencoders and recurrent networks. The last class, taught by Mundhenk, was a practical tutorial on using Livermore Computing resources to train deep learning models. The course material was adapted from deep learning workshops and open-source code examples and interwoven with the staff's personal deep learning experiences to give Lab employees a quality learning experience.

In all, 91 people signed up for the course, which was originally conceived as a machine learning series for Engineering postdocs and was fully funded by the Engineering postdoc program. However, due to overwhelming demand, the series was opened to anyone who was interested and had some familiarity with Python programming and installing libraries, as well as basic calculus and linear algebra.

"The Engineering postdoc program is pleased to be able to support this kind of skill expansion and mentorship at the Laboratory," said Engineering postdoc program coordinator Nathan Barton. "Building abilities to work across disciplines is a great way to invest in workforce development. And people's sustained interest in expanding their knowledge helps to make the Lab a great place to work."

Wenqin Li, a new postdoc in the energy utilization and delivery group in CED Engineering, attended the entire series and said it inspired her to find new ways to help solve energy problems.

"DL101 is a great class for researchers who are a strong believer of applying machine learning to help solve their engineering problems," Li said. "Brenda did a great job by explaining the most complex terms in the simplest way. Luke also designed very interesting lab demos too, which provides us great hands-on experience."

MED postdoc Eric Elton said he wanted to take the course because he had no knowledge of machine learning or AI, and he thought that a better grasp of the fundamentals would help him work more effectively with colleagues who program machine learning methods.

"I have a much better grasp of how ML works now, not just from a programming perspective but also from a fundamental perspective," Elton said. "The course has really taken the mystery out of what exactly the computers are doing when someone says, ‘we used AI to do x.’ I’m also much more aware of the limits of machine learning now than I was before the course, and why I can’t just ask the computer to solve some equations in the context of this data, or something like that."

Another Deep Learning 101 attendee, Farid Dowla, said: "This has been really an excellent class. It’s been a solid discussion on theory, techniques, tools and literature. It will benefit many at LLNL and certainly myself. I have been in this area for more than 20 years, and I can see how things have changed recently. I highly recommend the staff in this class. We need to have more of these."

Contact

Jeremy Thomas



(925) 422-5539
