Exascale in motion on earthquake risks

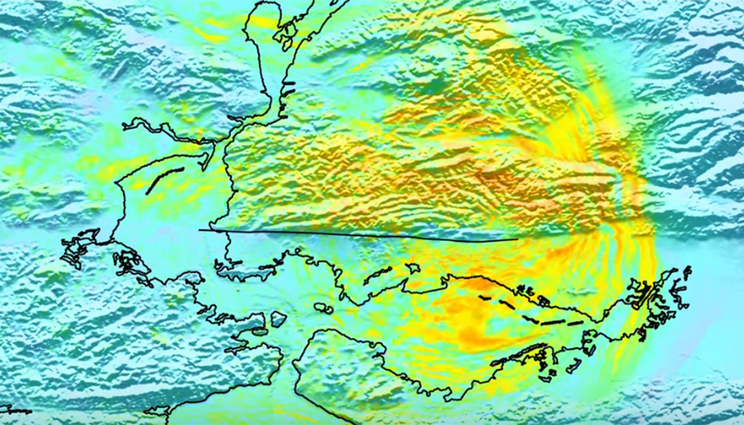

The (left) peak ground velocity (“ShakeMap”) and (right) ground velocity time-histories of the Hayward Fault at selected sites around the Bay Area.

Assessing large magnitude (greater than 6 on the Richter scale) earthquake hazards on a regional (up to 100 kilometers) scale takes big machines. To resolve the frequencies important to engineering analysis of the built environment (up to 10 Hz or higher), numerical simulations of earthquake motions must be done on today’s most powerful computers.
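To give a feel for why resolving 10 Hz over a 100-kilometer region is so demanding, here is a rough back-of-the-envelope sketch. It assumes a uniform grid, a minimum shear-wave speed of 500 meters per second, 8 grid points per shortest wavelength and a 30-kilometer model depth. None of those values come from the research team; they are illustrative assumptions only.

```python
def grid_spacing_m(min_wave_speed_m_s, max_freq_hz, points_per_wavelength):
    """Largest grid spacing that still samples the shortest wavelength."""
    shortest_wavelength_m = min_wave_speed_m_s / max_freq_hz
    return shortest_wavelength_m / points_per_wavelength


def grid_point_count(extent_m, depth_m, spacing_m):
    """Number of points in a uniform grid covering a square region."""
    n_horizontal = int(extent_m / spacing_m) + 1
    n_vertical = int(depth_m / spacing_m) + 1
    return n_horizontal * n_horizontal * n_vertical


# Illustrative assumptions, not values from the study:
MIN_SHEAR_SPEED_M_S = 500.0   # soft near-surface sediments
MAX_FREQ_HZ = 10.0            # engineering frequency target from the article
POINTS_PER_WAVELENGTH = 8     # assumed sampling for a 4th-order scheme
EXTENT_M = 100_000.0          # 100 km regional scale from the article
DEPTH_M = 30_000.0            # assumed model depth

h = grid_spacing_m(MIN_SHEAR_SPEED_M_S, MAX_FREQ_HZ, POINTS_PER_WAVELENGTH)
n = grid_point_count(EXTENT_M, DEPTH_M, h)
print(f"grid spacing: {h} m, grid points: {n:.3e}")
```

Under these assumptions the grid spacing is a few meters and the grid holds more than a trillion points, each updated at every time step. Real codes reduce this with coarser grids at depth, where waves travel faster.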

The algorithms and codes that run on today’s petascale supercomputers, with tens of thousands of cores, must be modified to reliably perform calculations on hundreds of thousands to millions of cores. Earthquake hazard simulation is an exascale problem, requiring a billion billion calculations per second.

To capture precisely the detailed geology and physics of earthquake motions, and how the shaking impacts buildings, Lawrence Livermore (LLNL) and Lawrence Berkeley (LBNL) scientists are building an end-to-end simulation framework. This work is part of the DOE's Exascale Computing Project (ECP), which aims to maximize the benefits of exascale systems -- future supercomputers that will be 50 times faster than the nation's most powerful system today -- for U.S. economic competitiveness, national security and scientific discovery.

"Our goal is to develop the algorithms and computer codes necessary to exploit the DOE’s march toward exascale platforms and to calculate the most realistic possible earthquake motions," LLNL seismologist Artie Rodgers said.

Earthquake ground motions pose an ever-present risk to homes, buildings and infrastructure such as bridges, overpasses and roads. Many urban areas are close to active earthquake faults and sedimentary basins that amplify seismic motions. However, many urban areas at risk for strong ground movement haven’t experienced damaging motions due to long time intervals between large earthquakes. Ground motions are especially strong in the near-source region (less than 10 kilometers) of large events (magnitude 7.0 and higher). Furthermore, ground motions are specific to each site because the fault geometry and sub-surface geologic structure that affect seismic waves are unique to each location.

The response of buildings, bridges and other engineered structures in the near-fault region depends heavily on the nature of ground motions. For example, in the 1992 Landers, California, quake, the near-fault motions showed a strong one-sided velocity pulse and a step in displacement that strongly force building foundations, to the point of potentially damaging structural components of the building. The research appears in a recent edition of the Institute of Electrical and Electronics Engineers (IEEE) Computer Society's Computers in Science and Engineering.

Traditional empirically based earthquake hazard estimates depend on ground motion prediction equations. However, there are very few measurements of large earthquakes at close distances, hindering modeling efforts that try to capture the true nature and variability of near-fault motions. In the absence of site-specific empirical data, numerical simulation is attractive to predict ground motions based on a range of model inputs. "Ultimately, we need to run large suites of simulations to sample variability of the earthquake rupture and sub-surface structure. So, we need efficient algorithms to run on the fastest machines," Rodgers said.

Current simulations resolve ground motions at about 1 hertz (vibrations per second). The recent paper shows simulations up to 2.5 hertz. Since then, the team has run simulations to 4-5 Hz.
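A rough sketch shows why each step up in frequency is hard-won. It uses a common rule of thumb for explicit wave-propagation solvers on a uniform grid: the number of grid points grows with the cube of the maximum frequency, and the number of time steps grows in proportion to it, so total work grows roughly with the fourth power. This scaling is a textbook simplification, not a measurement of SW4, and the function name is hypothetical.

```python
def relative_cost(base_freq_hz, target_freq_hz):
    """Approximate work ratio when raising the maximum resolved frequency.

    Assumes a uniform grid and an explicit time-stepping scheme:
    three spatial dimensions plus time each scale linearly with frequency.
    """
    ratio = target_freq_hz / base_freq_hz
    return ratio ** 4


# Frequencies mentioned in the article, relative to a 1 Hz baseline.
for freq_hz in (2.5, 5.0, 10.0):
    print(f"{freq_hz} Hz costs about {relative_cost(1.0, freq_hz):,.0f}x a 1 Hz run")
```

By this estimate, a 10 Hz simulation takes on the order of ten thousand times the work of a 1 Hz simulation over the same region.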

CASC computational mathematicians Anders Petersson and Bjorn Sjogreen are introducing fine-grained parallelism and mesh refinement in the existing SW4 (seismic waves 4th order) code, which simulates seismic wave propagation. To test the code's current capabilities and eventual scaling up, the team performed regional-scale simulations of a magnitude (M) 7.0 earthquake on the Hayward Fault. The Hayward Fault is capable of earthquakes of this size, including the last known rupture (estimated M 6.8) on Oct. 21, 1868. Strong shaking was experienced throughout the East Bay, but recording instruments weren’t yet developed. The current seismic hazard assessment for Northern California identifies the Hayward Fault as the most likely to rupture with an M 6.7 or greater event before 2044.

"The key goal in going to exascale is to develop computationally efficient algorithms for new architectures to compute ground motions with enough frequency resolution to assess engineering risks to critical infrastructure," Rodgers said. "Right now, we can’t quite do that, but the gap is closing with recent developments."

As part of the Exascale Application Development project, the team will port the SW4 code to new platforms and architectures and perform optimizations necessary to take full advantage of hardware enhancements. They have had success running on LBNL’s Cori Phase-II and Livermore Computing’s Quartz supercomputers.

Rodgers and Petersson’s work is funded by the DOE Exascale Computing Project, a project jointly supported by the DOE Office of Science and the National Nuclear Security Administration that seeks to provide breakthrough modeling and simulation solutions through exascale computing. Sjogreen is funded by a DOE Center of Excellence project.

Contact

Anne M. Stark

[email protected]

(925) 422-9799

Related Links

Computers in Science and Engineering

Simulating the south Napa earthquake

Recreating the 1906 San Francisco earthquake

Tags

Physical and Life Sciences

Featured Articles